TL;DR: The first benchmark explicitly designed to evaluate the spatiotemporal (4D) reasoning capabilities of Vision Language Models (VLMs).

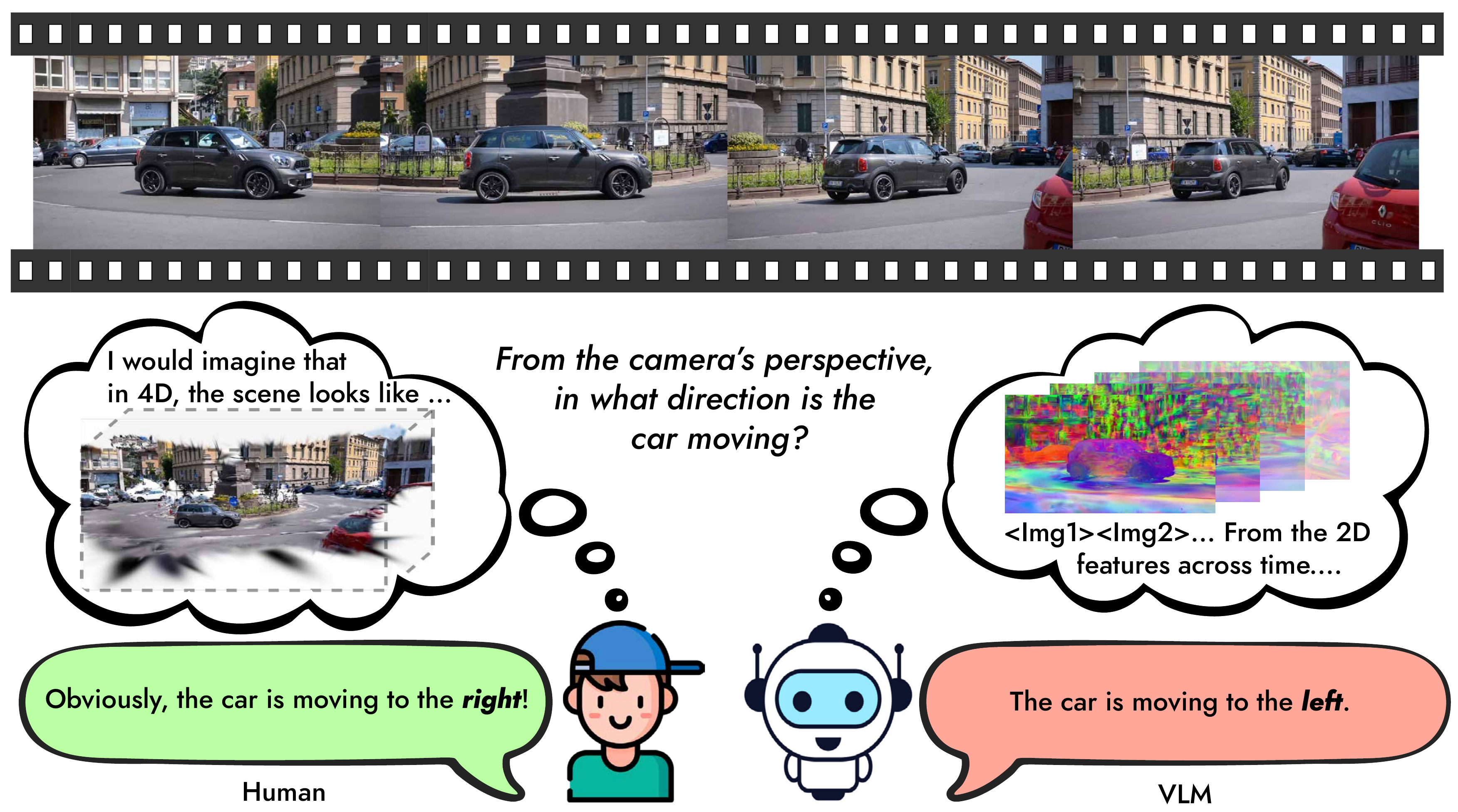

Spatiotemporal (4D) Awareness. Humans intuitively reason in 4D (3D space + time), effortlessly reconstructing the dynamic spatial trajectory of moving objects from any perspective. In contrast, current Vision Language Models (VLMs) typically rely on aggregating 2D visual features across time, which leads to incorrect predictions when understanding motion requires deeper spatiotemporal reasoning. In this example, humans correctly perceive the car moving to the right, while the VLM (GPT-4o) incorrectly predicts leftward movement, suggesting that VLMs struggle with spatiotemporal reasoning.

Abstract

Vision language models (VLMs) have shown remarkable capabilities in integrating linguistic and visual reasoning but remain fundamentally limited in understanding dynamic spatiotemporal interactions. Humans effortlessly track and reason about object movements, rotations, and perspective shifts, abilities that are essential for robust understanding of the dynamic real world yet notably lacking in current VLMs. In this paper, we introduce VLM4D, the first benchmark specifically designed to evaluate the spatiotemporal reasoning capabilities of VLMs. Our benchmark comprises diverse real-world and synthetic videos accompanied by carefully curated question-answer pairs emphasizing translational and rotational motions, perspective awareness, and motion continuity. Through comprehensive evaluations of state-of-the-art open-source and closed-source VLMs, we identify significant performance gaps compared to human baselines, highlighting fundamental deficiencies in existing models. Extensive analysis reveals that VLMs struggle particularly with integrating multiple visual cues and maintaining temporal coherence. We further explore promising directions, such as leveraging 4D feature field reconstruction and targeted spatiotemporal supervised fine-tuning, and demonstrate their effectiveness in enhancing spatiotemporal comprehension. Our work aims to encourage deeper exploration into improving VLMs' spatial and temporal grounding, paving the way towards more capable and reliable visual intelligence for dynamic environments.

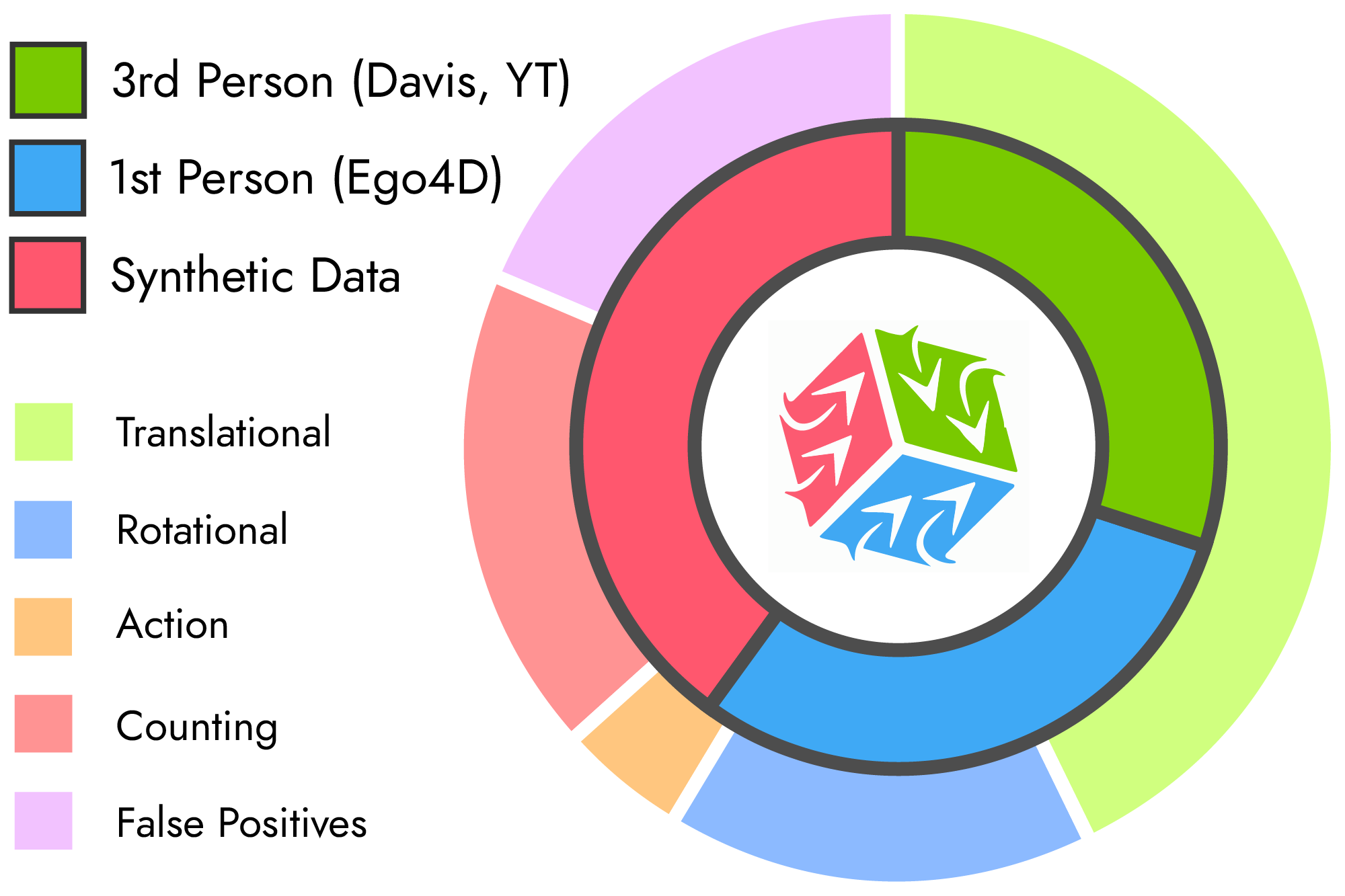

Distribution of Dataset Sources and Annotations.

Overview of the dataset composition, illustrating the proportions of real third-person (exo-centric) videos (DAVIS, YouTube-VOS), real first-person (ego-centric) videos (Ego4D), and synthetic videos (Cosmos). The real video data is further categorized by annotation types, including translational, rotational, action, counting, and false positive queries (targeting nonexistent events to assess critical reasoning).

Model Performance

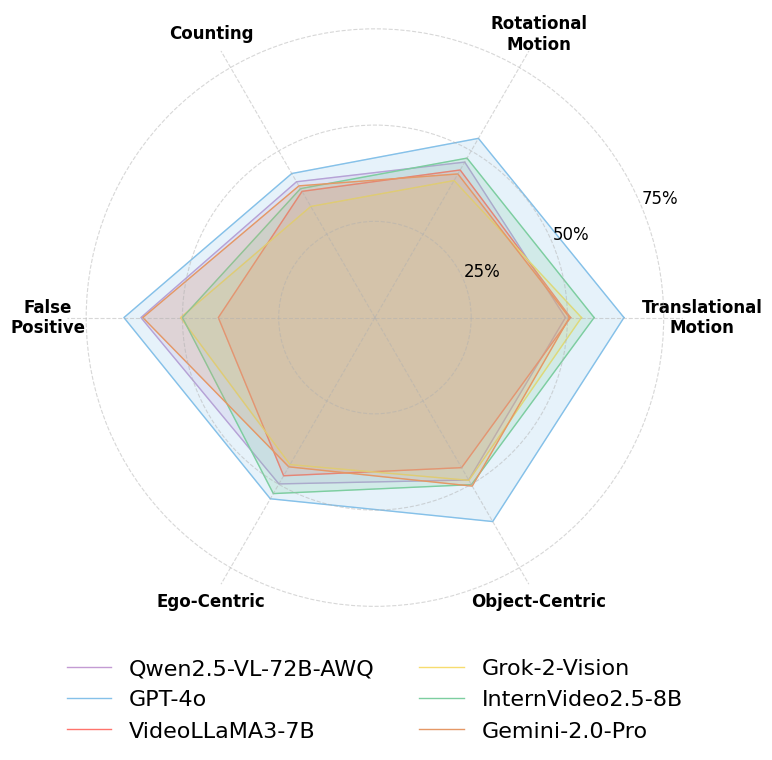

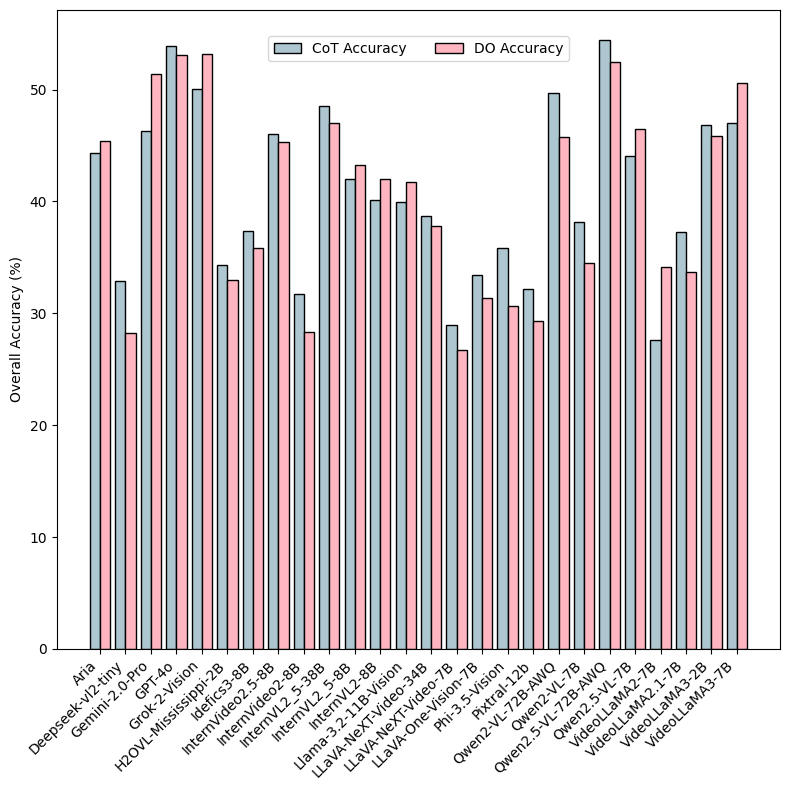

(Left) Comparison of accuracy across types of spatiotemporal questions. (Right) Accuracy comparison between Chain-of-Thought (CoT) and Direct Output (DO) prompting across VLMs.
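
To make the CoT versus DO distinction concrete, the following is a minimal sketch of how the two prompting modes might be set up for a multiple-choice question and how a letter answer might be parsed from the reply. The prompt wording, the four-option `A`-`D` format, and the helper names `build_prompt` and `extract_choice` are illustrative assumptions, not the benchmark's actual evaluation code.

```python
import re

OPTION_LETTERS = "ABCD"  # assumed four-option format (hypothetical)


def build_prompt(question, options, mode):
    """Format a multiple-choice question for 'cot' or 'do' prompting."""
    lines = [question]
    lines += [f"{letter}. {text}" for letter, text in zip(OPTION_LETTERS, options)]
    if mode == "cot":
        # Chain-of-Thought: ask for reasoning before the final letter.
        lines.append("Think step by step, then finish with 'Answer: <letter>'.")
    elif mode == "do":
        # Direct Output: ask for the letter only.
        lines.append("Reply with only the letter of the correct option.")
    else:
        raise ValueError(f"unknown mode: {mode}")
    return "\n".join(lines)


def extract_choice(response):
    """Return the chosen option letter, or None if none can be found."""
    # Prefer an explicit 'Answer: X' marker, as a CoT reply would contain.
    marked = re.findall(
        r"answer\s*[:\-]?\s*\(?([A-D])\b", response, flags=re.IGNORECASE
    )
    if marked:
        return marked[-1].upper()
    # Otherwise accept a reply that is just a letter, as in direct output.
    bare = re.fullmatch(r"\s*\(?([A-D])\)?\.?\s*", response, flags=re.IGNORECASE)
    return bare.group(1).upper() if bare else None


# Hypothetical question in the spirit of the car example above.
question = "Which direction is the car moving?"
options = ["Left", "Right", "Toward the camera", "Not moving"]
cot_prompt = build_prompt(question, options, "cot")
do_prompt = build_prompt(question, options, "do")
```

A reply that cannot be parsed returns `None` here; whether such replies count as wrong or are re-queried is a design choice this sketch leaves open.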

Leaderboard

We report CoT-prompted evaluation results on the VLM4D benchmark for a range of proprietary and open-source VLMs. Values are accuracy (%); FP denotes false positive queries.

| Organization | Model | Release | Real: Ego-centric | Real: Exo-centric | Real: Average | Synthetic: Directional | Synthetic: FP | Synthetic: Average | Overall |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| User Study | Human Performance | | 99.6 | 99.7 | 99.7 | 95.8 | 100 | 96.2 | 98.8 |
| Random | Random Selection | | 24.4 | 23.2 | 23.6 | 25.5 | 24.7 | 25.4 | 24.1 |
| **Latest Proprietary VLMs** | | | | | | | | | |
| OpenAI | GPT-4o | 2024-11 | 55.5 | 62.2 | 60.0 | 49.5 | 53.3 | 49.9 | 57.5 |
| Google | Gemini-2.5-Pro | 2025-6 | 64.6 | 62.9 | 63.5 | 54.8 | 80.0 | 57.3 | 62.0 |
| Anthropic | Claude-Sonnet-4 | 2025-5 | 52.6 | 52.1 | 52.2 | 44.0 | 86.7 | 48.3 | 51.3 |
| xAI | Grok-2-Vision | 2024-12 | 48.8 | 49.7 | 49.4 | 49.3 | 66.7 | 51.0 | 49.8 |
| **Open-source Image VLMs** | | | | | | | | | |
| Meta | Llama-4-Maverick-17B | 2025-4 | 52.6 | 54.3 | 53.8 | 53.3 | 51.1 | 53.0 | 53.6 |
| Meta | Llama-4-Scout-17B | 2025-4 | 48.6 | 56.2 | 53.7 | 53.3 | 75.6 | 55.5 | 54.1 |
| Microsoft | Phi-4-Multimodal | 2025-3 | 41.0 | 35.4 | 37.2 | 37.5 | 11.1 | 34.8 | 36.6 |
| Microsoft | Phi-3.5-Vision | 2024-7 | 33.4 | 38.8 | 37.1 | 23.3 | 37.8 | 24.7 | 34.0 |
| DeepSeek | DeepSeek-VL2 | 2024-12 | 33.6 | 32.9 | 33.1 | 31.8 | 46.7 | 33.3 | 33.2 |
| Shanghai AI Lab | InternVL2.5-38B | 2024-11 | 46.6 | 50.1 | 48.9 | 43.3 | 57.8 | 44.7 | 47.9 |
| Shanghai AI Lab | InternVL2.5-8B | 2024-11 | 39.0 | 44.0 | 42.4 | 40.8 | 42.2 | 40.9 | 42.0 |
| Mistral AI | Pixtral-12B | 2024-9 | 32.3 | 25.8 | 27.9 | 24.3 | 22.2 | 24.0 | 27.0 |
| Rhymes | Aria | 2024-11 | 47.2 | 44.0 | 45.1 | 38.5 | 71.1 | 41.8 | 44.3 |
| **Open-source Video VLMs** | | | | | | | | | |
| Alibaba | Qwen2.5-VL-7B | 2025-1 | 42.3 | 43.7 | 43.3 | 43.5 | 64.4 | 45.6 | 43.8 |
| Alibaba | Qwen2.5-VL-72B | 2025-1 | 54.3 | 52.5 | 53.1 | 49.5 | 80.0 | 52.6 | 53.0 |
| Alibaba | Qwen2-VL-7B | 2024-8 | 36.1 | 34.7 | 35.2 | 40.5 | 35.6 | 40.0 | 36.3 |
| Alibaba | Qwen2-VL-72B | 2024-9 | 48.1 | 43.0 | 44.6 | 40.8 | 73.3 | 44.0 | 44.5 |
| DAMO | VideoLLama3-2B | 2025-1 | 53.2 | 42.5 | 46.0 | 34.3 | 55.6 | 36.4 | 43.7 |
| DAMO | VideoLLama3-7B | 2025-1 | 49.4 | 45.1 | 46.5 | 42.8 | 53.3 | 43.8 | 45.9 |
| Shanghai AI Lab | InternVideo2.5-8B | 2025-1 | 57.2 | 50.5 | 52.7 | 44.3 | 46.7 | 44.5 | 50.7 |
| Shanghai AI Lab | InternVideo2-8B | 2024-8 | 35.6 | 39.3 | 38.1 | 43.0 | 0.0 | 38.7 | 38.2 |
| LLaVA | LLaVA-One-Vision-7B | 2024-9 | 36.8 | 35.6 | 36.0 | 37.8 | 35.6 | 37.5 | 36.3 |
| LLaVA | LLaVA-NeXT-Video-34B | 2024-6 | 29.6 | 31.6 | 30.9 | 24.5 | 55.6 | 27.6 | 30.1 |
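
The Overall column is not the simple mean of the Real and Synthetic averages (for GPT-4o, 60.0 and 49.9 give 57.5 rather than 54.95), which is consistent with accuracy pooled over all questions. This is an inference from the numbers, not something the page states. A minimal sketch of that kind of aggregation, using a hypothetical `accuracy_by_group` helper and made-up toy records, might look like this:

```python
from collections import defaultdict


def accuracy_by_group(records):
    """Return ({group: accuracy %}, pooled accuracy %) from (group, is_correct) pairs."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, is_correct in records:
        total[group] += 1
        correct[group] += int(is_correct)
    if not total:
        raise ValueError("no records to score")
    # Per-group accuracy: fraction of correct answers within each group.
    per_group = {g: 100.0 * correct[g] / total[g] for g in total}
    # Pooled accuracy: every question counts once, so larger groups weigh more.
    pooled = 100.0 * sum(correct.values()) / sum(total.values())
    return per_group, pooled


# Toy records, made up for illustration only (not benchmark data).
records = (
    [("real", True)] * 6 + [("real", False)] * 2
    + [("synthetic", True)] * 1 + [("synthetic", False)] * 1
)
per_group, pooled = accuracy_by_group(records)
simple_mean = sum(per_group.values()) / len(per_group)
```

With these toy records the pooled accuracy and the simple mean of the group accuracies differ, because the larger group contributes more questions to the pooled figure.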

BibTeX

@inproceedings{zhou2025vlm4d,
  title={VLM4D: Towards Spatiotemporal Awareness in Vision Language Models},
  author={Zhou, Shijie and Vilesov, Alexander and He, Xuehai and Wan, Ziyu and Zhang, Shuwang and Nagachandra, Aditya and Chang, Di and Chen, Dongdong and Wang, Eric Xin and Kadambi, Achuta},
  booktitle={Proceedings of the IEEE/CVF International Conference on Computer Vision},
  pages={8600--8612},
  year={2025}
}